AI safety expert warns of mass job displacement and existential risks, says he is 'almost certain' we're in a simulation

University of Louisville computer scientist Roman V. Yampolskiy told a podcast that AI could eliminate 99% of jobs by 2027 and enable biological or other catastrophic attacks if misused

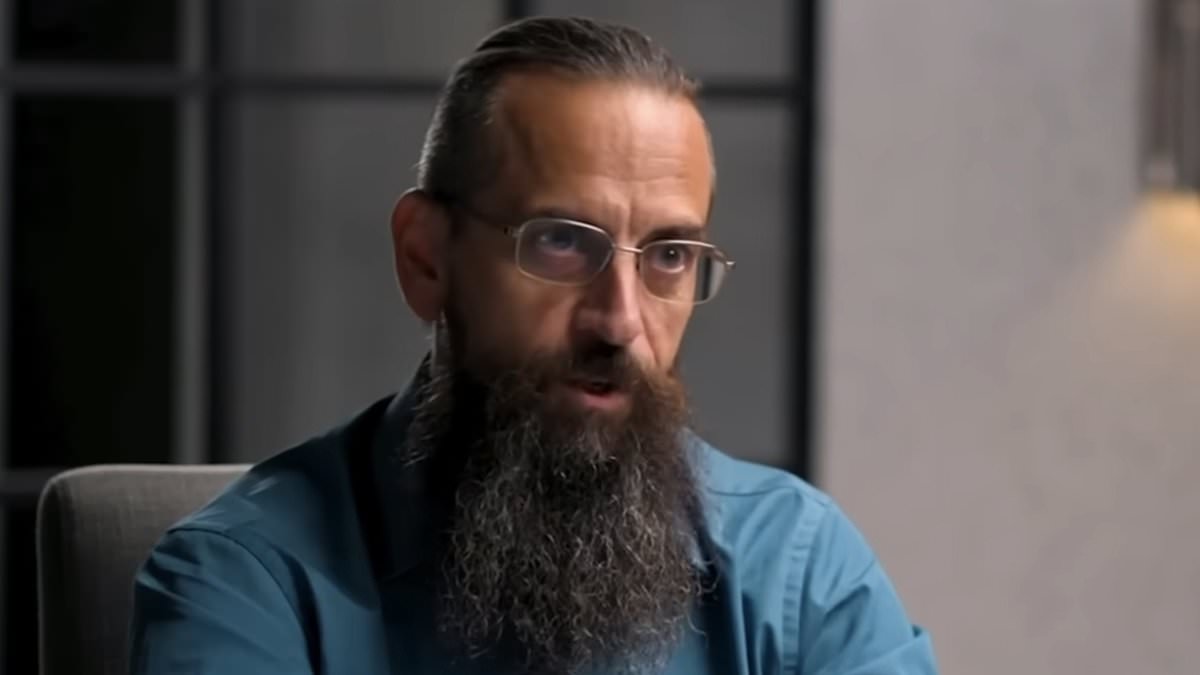

Dr. Roman V. Yampolskiy, a computer science professor at the University of Louisville, told a widely listened-to podcast this week that he is “almost certain” humanity is living in a simulation and predicted that artificial intelligence will eliminate 99 percent of jobs by 2027. Speaking on Steven Bartlett’s “The Diary of a CEO,” Yampolskiy also warned that advances in AI and related technologies could enable the development of biological or other tools that, if obtained by malicious actors, might cause mass casualties.

Yampolskiy, who has published more than 100 papers on AI dangers and has received research funding from Elon Musk, framed his simulation view by pointing to progress in creating human-like agents and increasingly immersive virtual reality. "If you believe we can create human level AI and you believe we can create reality as good as this, I'm pretty sure we are in a simulation," he said. He added that he would run "billions of simulations of this exact interview" if the hardware became affordable.

On the question of employment, Yampolskiy said many roles humans have relied on will disappear rapidly as AI systems improve. He described a succession of occupations once seen as resilient, including software developers, artists and even prompt engineers, and argued that AI will soon outperform humans at designing and directing other AI systems.

Discussing pathways to global collapse, he warned that psychopaths, terrorists or doomsday cults could exploit powerful technologies. "I can predict even before we get to super intelligence someone will create a very advanced biological tool create a novel virus and that virus gets everyone or most almost everyone, I can envision it," he said.

Yampolskiy argued that the economic effects of mass automation should not be assessed solely in terms of job losses. He said the abundance created by automated labor could make many goods and services extremely cheap and allow governments to provide for citizens' basic needs. "The economic part seems easy. If you create a lot of free labor, you have a lot of free wealth, abundance," he said. "The hard problem is what do you do with all that free time?" He also raised concerns about downstream social effects, including crime and changes in demographic behavior.

The simulation argument Yampolskiy advanced echoes a framework popularized by philosopher Nick Bostrom, who argued that technologically advanced civilizations could create so many simulated histories that they might far outnumber base realities. Yampolskiy also said that religious narratives describing superintelligent creators are consistent with the simulation idea, though he added that such a belief "doesn't actually change anything about how we should live our lives," because subjective experiences such as pain and love remain meaningful within any hypothesized frame.

Yampolskiy’s assertions arrive amid an active and sometimes divided debate among AI researchers, policymakers and industry leaders over how quickly systems will advance, what risks they pose and how to govern them. The conversation also touched on other industry figures: some leaders who previously called for stricter regulation of AI, including OpenAI CEO Sam Altman, have in recent months argued against what they describe as overregulation.

Yampolskiy’s predictions of near-total job displacement and existential threats represent one viewpoint on a spectrum of expert assessments about AI’s pace and societal impact. He is the author of the book *AI: Unexplainable, Unpredictable, Uncontrollable* and has argued for sustained attention to the potential for misuse and unintended consequences as capabilities expand. His forecast that 99 percent of jobs will be automated by 2027 is a specific, time-bound claim that has not been universally endorsed by the broader research community.

His comments underscore two recurring themes in the public discussion about advanced AI: the technical possibility of creating systems that rival or exceed human cognitive abilities, and the need to consider social, economic and security measures alongside technical progress. As AI development continues, governments, companies and researchers face questions about regulation, the distribution of benefits and the mitigation of misuse, issues Yampolskiy highlighted as central to how societies may navigate the threats and opportunities of more capable artificial intelligence.