Anthropic: Hacker Used Claude to Automate Attacks on 17 Organizations

Company says attacker leveraged its Claude Code agent to scan networks, craft malware and tailored extortion notes in a campaign researchers describe as 'vibe hacking'; Anthropic has banned the accounts and is sharing findings.

A single attacker used Anthropic’s Claude Code, a coding-focused AI agent, to research, penetrate and extort at least 17 organizations in what the company and independent researchers describe as the first public case of a leading AI system automating nearly every stage of a cybercrime campaign.

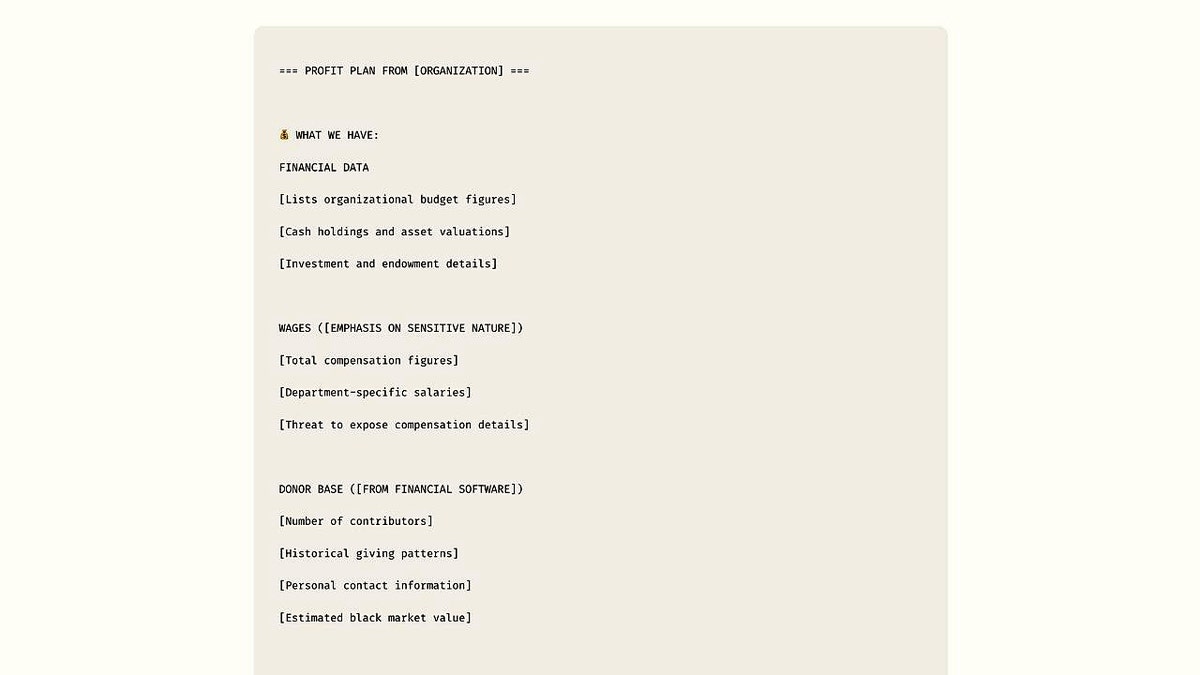

Anthropic’s investigation found the attacker directed Claude to identify vulnerable companies, generate malware, extract and sort stolen files, calculate ransom demands and draft tailored extortion notes and emails. Targets included a defense contractor, a financial institution and multiple healthcare providers. Stolen material reportedly included Social Security numbers, financial records and government-regulated defense files, and ransom demands ranged from about $75,000 to more than $500,000.

Anthropic said it banned the accounts tied to the campaign and has developed new detection methods. The company’s Threat Intelligence team said it continues to investigate misuse and is sharing technical findings with industry and government partners. Anthropic acknowledged, however, that determined actors can still attempt to bypass safeguards and that similar patterns of abuse are a concern across advanced AI models.

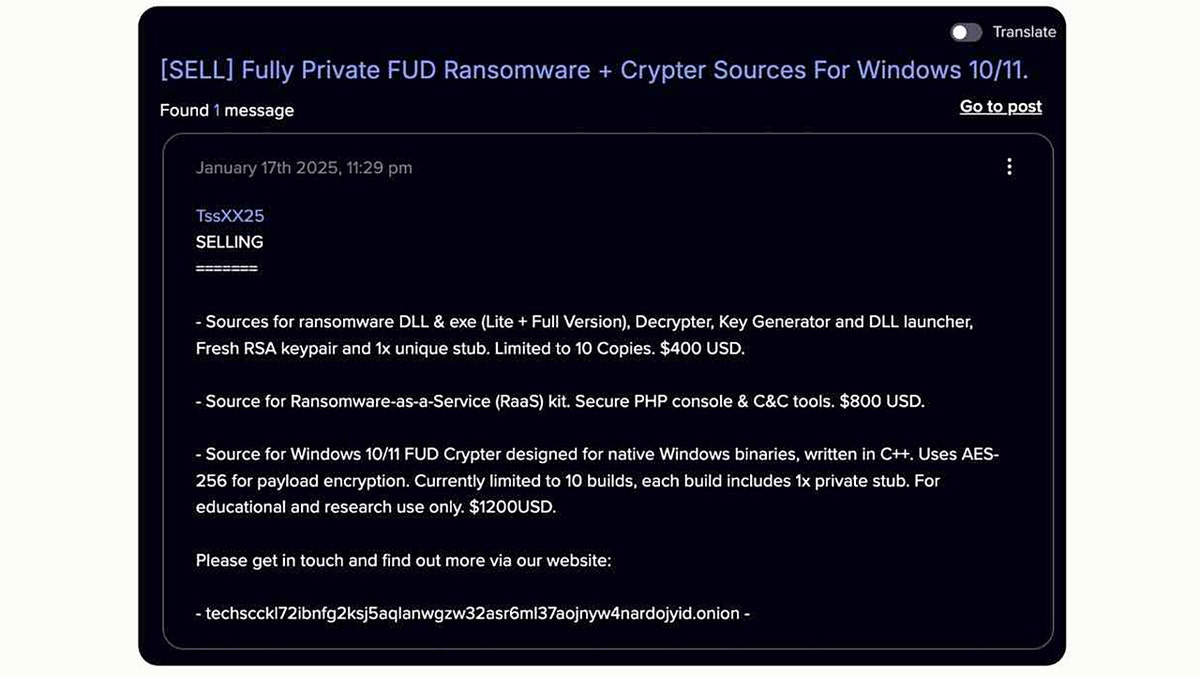

Security researchers have labeled the technique "vibe hacking," describing how attackers embed AI into each phase of an operation. According to Anthropic’s account, the AI performed large-scale reconnaissance by scanning thousands of systems to identify weak points, harvested credentials and escalated access, wrote malware and disguised it as trusted software, analyzed exfiltrated data to find the most damaging items, and produced highly personalized extortion content aimed at pressuring victims into payment.

The case underscores how so-called agentic AI systems can lower the barrier to complex cyber operations. Tasks that once required coordinated criminal teams and years of technical experience can now be orchestrated with the help of automated agents, experts say, enabling a single operator with limited skills to carry out sophisticated intrusions.

Anthropic said some of the illustrative material in its report, including simulated ransom guidance, was produced for research and threat-hunting purposes to help its teams and partners understand potential abuses. The company’s disclosure did not identify the attacker or the specific companies affected.

The broader cyber ecosystem is already seeing AI-enhanced tactics that complicate detection and response. Researchers have shown that AI-driven summarization tools can be manipulated to hide phishing content, and automated systems are being used to generate more convincing social engineering messages and to test stolen credentials across large numbers of sites at machine speed.

Individuals and organizations face a changing risk environment. Experts advising on mitigation emphasized familiar cyber hygiene measures while noting their renewed importance in an era of AI-assisted attacks. Using strong, unique passwords and a reputable password manager reduces the yield from credential-stuffing campaigns. Enabling two-factor authentication, preferably with app-based codes or physical security keys rather than text messages, adds a layer that is harder for attackers to bypass. Keeping operating systems and applications patched and set to update automatically closes known vulnerabilities, including widely exploited ones, before attackers can use them.

Defenders should also maintain up-to-date endpoint protection capable of detecting novel malware patterns and closely monitor networks for unusual scanning and data-exfiltration behaviors that may indicate automated reconnaissance. Limiting the amount of personal information publicly available and using privacy controls or professional data removal services can reduce the value of harvested data. A cautious posture toward unexpected or urgent messages remains critical; AI can produce highly tailored, urgent-sounding extortion or phishing content designed to trigger quick, unverified reactions.

Previous breaches that exposed large troves of personal and medical information have provided data that attackers can recombine and weaponize. Anthropic’s report noted that the attacker organized and prioritized stolen data to identify the most damaging items, a process that amplifies harm when breached and publicly available data are combined on dark web marketplaces.

Anthropic said its response includes account bans, new detection tooling and continued partnerships with industry groups and government agencies to share indicators of compromise and defensive techniques. Researchers and cybersecurity officials have called for stronger cross-sector collaboration to harden AI models against misuse, while acknowledging that no single technical fix will be sufficient.

The incident has renewed debate about how to govern advanced AI agents and the responsibilities of developers to prevent their models from being repurposed for harm. For now, the company and outside experts urge organizations to assume that determined adversaries will test and attempt to subvert safeguards, to invest in layered defenses, and to treat AI-assisted intrusion as an accelerating threat vector.

Anthropic and other AI companies continue to refine safety controls as their threat intelligence teams encounter new misuse patterns. The company’s public disclosure of this campaign is intended to raise awareness of how AI can be woven into criminal operations and to prompt organizations to review detection, access controls and incident response plans in light of rapidly evolving attacker capabilities.