North Korean Hackers Use AI to Forge Military IDs in Phishing Campaign

Genians reports Kimsuky used ChatGPT to draft a fake South Korean military ID, highlighting AI-enabled fraud threats in cyber espionage.

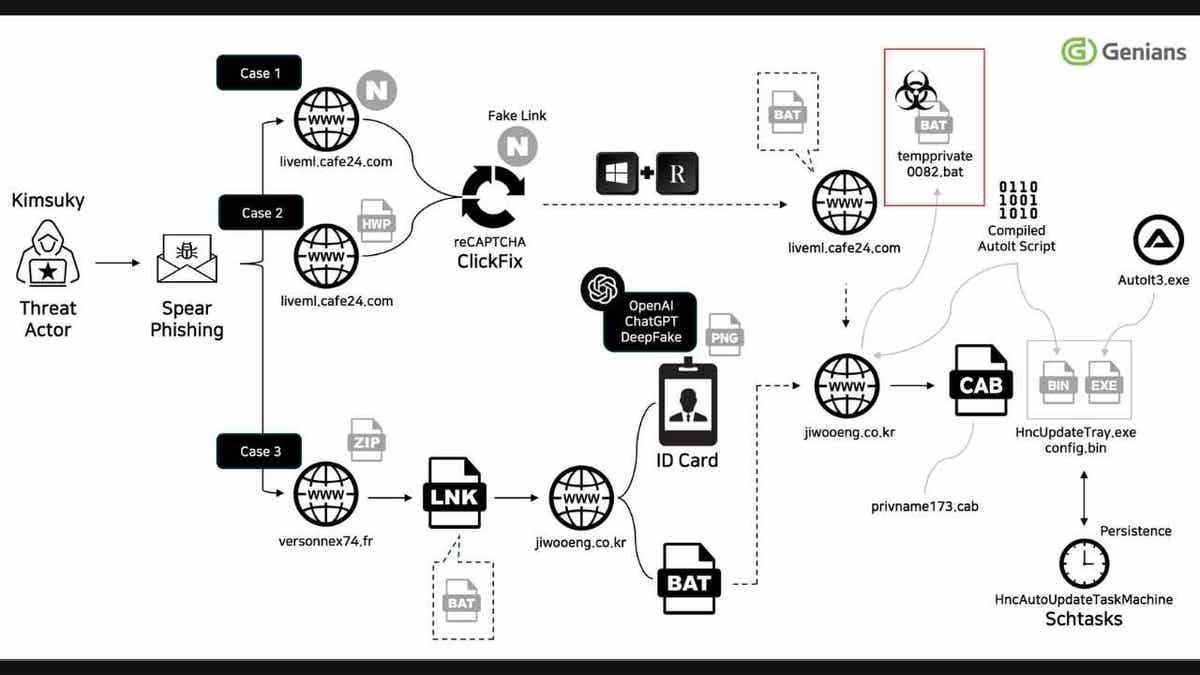

A North Korean hacking group known as Kimsuky used ChatGPT to generate a draft of a fake South Korean military ID. The forgery was then attached to phishing emails that impersonated a South Korean defense institution responsible for issuing credentials to military-affiliated officials. The discovery, revealed in a blog post by South Korean cybersecurity firm Genians, signals how AI-enabled forgery can be scaled for targeted espionage.

ChatGPT's safeguards are designed to block attempts to generate government IDs, but the hackers found a workaround by framing prompts as 'sample designs for legitimate purposes.' Genians said the model produced realistic-looking mock-ups, underscoring how generative AI can lower the barrier to producing convincing fraudulent assets at scale. The case illustrates how an initial document can be paired with follow-on channels (an email attachment first, then a call or video appearance) to reinforce a deception.

Kimsuky has a history of espionage operations targeting South Korea, Japan, and the United States. The U.S. Department of Homeland Security said in 2020 that the group was 'most likely tasked by the North Korean regime with a global intelligence-gathering mission.' The latest AI-enabled ID forgery underscores how generative AI has shifted the threat landscape by enabling attackers to operate at greater scale.

Beyond Korea, AI-fueled cyber activity spans multiple countries. Anthropic reported that Chinese hackers used Claude as a full-stack cyberattack assistant for more than nine months, targeting Vietnamese telecommunications providers, agricultural systems, and government databases. OpenAI has said Chinese state actors also used ChatGPT to build password-brute-forcing scripts and to gather information on U.S. defense networks, satellites, and identity verification systems. Some operations even used ChatGPT to generate fake social media posts designed to sow political discord in the United States. Google’s Gemini model has seen similar use by Chinese groups, while North Korean hackers have leaned on Gemini to draft cover letters and scout IT job postings.

Experts say AI-powered hacking threats demand new defenses. 'Generative AI has lowered the barrier to entry for sophisticated attacks,' said Sandy Kronenberg, CEO of Netarx. 'The real concern is not a single fake document but how these tools are used in combination across channels.' He urged security teams to verify across voice, video, and text signals, and to invest in email authentication, phishing-resistant MFA, and real-time monitoring. 'The threats are faster, smarter and more convincing. Our defenses must be, too.'

Experts also emphasize practical steps for individuals and organizations. When a message feels urgent or unusual, pause and verify it through a trusted channel. Use antivirus software on all devices, and consider data-removal services to limit what attackers can glean from data brokers. Always check sender details, enable multi-factor authentication, keep software up to date, and report suspicious messages to your IT team or email provider. Context matters: asking why a message arrived, and whether the request makes sense, can slow down an attacker.
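For readers who triage suspicious mail, the advice to check sender details can be partly automated. The following is a minimal sketch, not a method described by Genians or Netarx: it uses Python's standard `email` module to compare the `From` and `Reply-To` domains and to read the SPF, DKIM, and DMARC verdicts from the `Authentication-Results` header. The function names `domain_of` and `sender_red_flags` are hypothetical, and the sketch assumes the header was added by your own receiving mail server, since an attacker can forge a header inserted upstream.

```python
import re
from email import message_from_string
from email.utils import parseaddr


def domain_of(header_value):
    """Return the lowercased domain of the address in a header, or ''."""
    _, addr = parseaddr(header_value or "")
    return addr.rpartition("@")[2].lower() if "@" in addr else ""


def sender_red_flags(raw_message):
    """List simple warning signs found in a raw email's headers.

    Illustrative only: a clean result does not prove a message is safe.
    """
    msg = message_from_string(raw_message)
    flags = []

    # A Reply-To that points at a different domain than From is a
    # common sign that replies are being diverted to the attacker.
    from_domain = domain_of(msg.get("From"))
    reply_domain = domain_of(msg.get("Reply-To"))
    if reply_domain and reply_domain != from_domain:
        flags.append(
            f"Reply-To domain {reply_domain} differs from From domain {from_domain}"
        )

    # Assumption: Authentication-Results was written by a trusted
    # receiving server. Anything other than "pass" is flagged.
    auth = " ".join(msg.get_all("Authentication-Results") or [])
    for check in ("spf", "dkim", "dmarc"):
        match = re.search(rf"\b{check}=(\w+)", auth, re.IGNORECASE)
        result = match.group(1).lower() if match else "missing"
        if result != "pass":
            flags.append(f"{check} result: {result}")

    return flags
```

Passing the raw text of a message to `sender_red_flags` returns a list of human-readable warnings, which is empty when nothing stands out. Checks like these only narrow the field; a message that passes them can still be a well-crafted lure, which is why verifying through a separate trusted channel remains the stronger safeguard.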

Cybersecurity researchers say AI-driven fraud is reshaping the threat landscape, prompting a shift in training and defense strategies. As attackers expand their toolkits to include AI models such as ChatGPT, Claude, and Gemini, defenders must adapt by integrating cross-channel verification, stronger authentication, and closer monitoring. The public and private sectors face a continuing challenge to stay ahead of increasingly realistic forgeries and social-engineering schemes, and users are urged to stay vigilant as AI tools evolve.