Whistleblowers Tell Senate Meta Prioritized VR Profit Over Child Safety

Former Meta researchers say company suppressed internal findings showing children exposed to sexual content in virtual reality; Meta disputes the claims

Two former Meta researchers told a Senate subcommittee on Tuesday that the company put profits from its virtual-reality business ahead of protecting children, and that internal research showing minors were using Meta’s VR products and being exposed to sexually explicit material was shut down.

Cayce Savage, a former user experience researcher, told the Senate subcommittee on privacy and technology that she encountered instances of children being bullied, sexually assaulted and asked for nude photographs while using Meta’s VR platforms. "Meta cannot be trusted to tell the truth about the safety or use of its products," Savage said at the hearing. Jason Sattizahn, a former Reality Labs researcher, testified alongside Savage.

The testimony followed reporting that an internal Meta policy document permitted company chatbots to "engage a child in conversations that are romantic or sensual," a Reuters exclusive that has drawn additional scrutiny from lawmakers. When Sen. Marsha Blackburn (R-Tenn.) asked Sattizahn whether the Reuters report should surprise anyone, he responded, "No, not at all."

Savage and Sattizahn are among a group of current and former Meta employees whose accounts were first reported by The Washington Post on Monday. Savage said researchers were instructed not to investigate harms to children using Meta’s VR technology so the company could plausibly claim ignorance of the problem.

Meta responded in a statement through spokesperson Andy Stone, saying the whistleblower claims were "based on selectively leaked internal documents that were picked specifically to craft a false narrative," and that "there was never any blanket prohibition on conducting research with young people." Meta has previously said examples reported by Reuters were inconsistent with the company’s policies and had been removed.

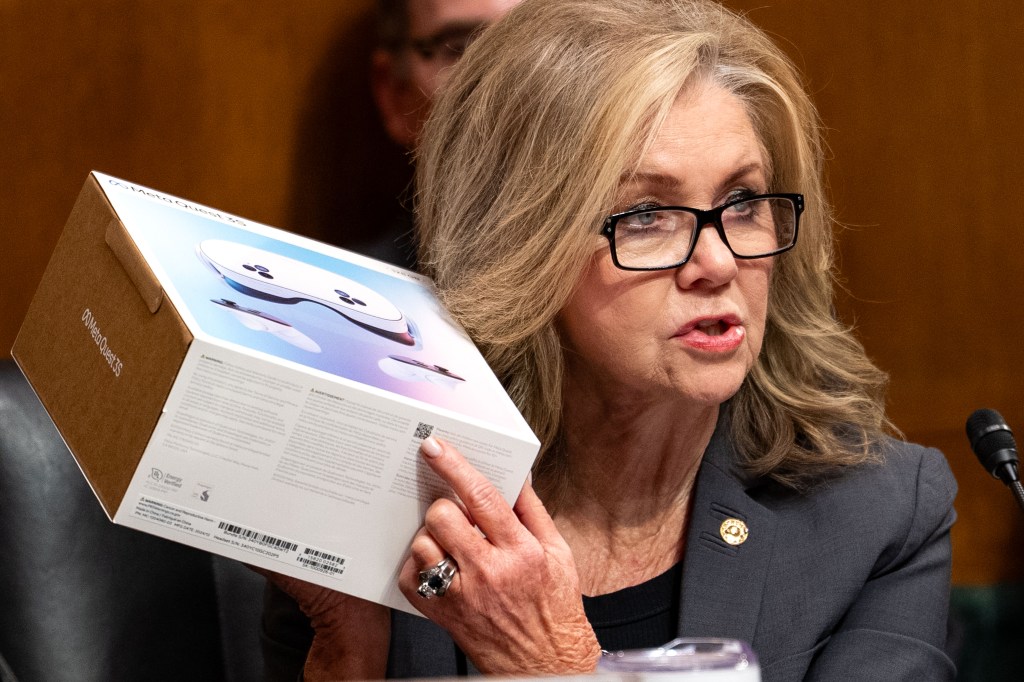

Senators pressed witnesses on whether the company’s internal practices and AI systems adequately protected minors. Blackburn said the whistleblowers’ accounts underscored the need for Congress to pass the Kids Online Safety Act, a bill she co-sponsored that passed the Senate last year but failed to advance in the House.

Witnesses described a range of harms children encountered in virtual spaces operated by Meta, which develops VR hardware and software through its Reality Labs division. Their testimony tied concerns about VR environments to broader worries about how AI chatbots interact with minors, echoing recent reporting and congressional inquiries into tech platforms' safety protocols.

Meta’s Reality Labs has invested heavily in augmented- and virtual-reality hardware and software as part of CEO Mark Zuckerberg’s push to build a so-called metaverse. Lawmakers at the hearing questioned whether business incentives to expand user engagement in immersive platforms could conflict with the company’s obligations to protect vulnerable users, particularly children.

In addition to the whistleblower testimony, senators cited the Reuters report and other internal documents as evidence that policies and enforcement around AI-driven chat systems and immersive social platforms require closer oversight. Meta has said it updates and enforces policies to prevent abuse, and has removed examples inconsistent with its rules.

The hearing comes amid increasing congressional attention to how major technology companies manage content, enforce safety standards and deploy AI. Lawmakers from both parties have raised concerns about the adequacy of current protections for children online and the transparency of internal company research and policies.

The subcommittee did not immediately announce further action following the testimony. Lawmakers and witnesses said the disclosures highlighted gaps in industry practices and the need for clearer rules or legislation to address how AI systems and immersive technologies interact with underage users.