New Mexico AG blasts Meta over Instagram PG-13 rating, calls it dangerous promotional stunt

State attorney general questions Meta's safety claims amid ongoing lawsuit accusing the company of failing to shield minors online

New Mexico Attorney General Raúl Torrez on Monday denounced Meta’s new PG-13 rating system for Instagram, labeling the rollout a dangerous promotional stunt that could lull parents into a false sense of security about online risks facing children. In a letter to Mark Zuckerberg and Instagram chief Adam Mosseri, Torrez said Meta’s claims about protective features were false and urged the company to stop marketing teen accounts as PG-13 until meaningful safeguards are in place. The missive, a copy of which was exclusively obtained by The Post, underscores the attorney general’s broader fight with Meta over child safety tools and comes as the state pursues a civil case accusing Meta of failing to shield kids from adult content and predators.

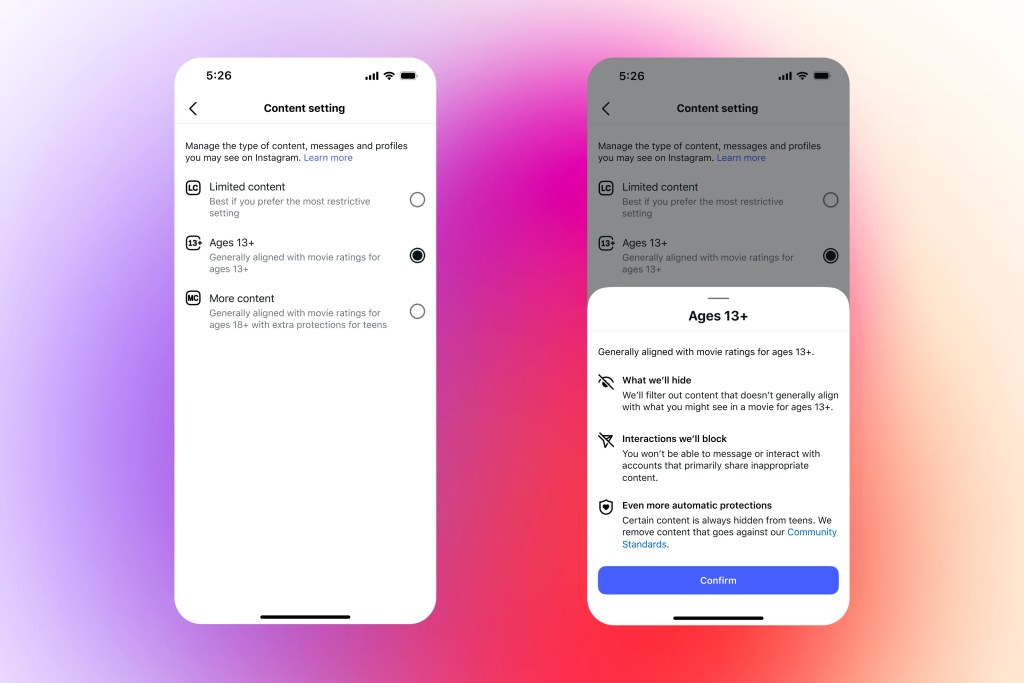

In October, Meta announced that teens on Instagram would automatically be placed under safety filters “guided by PG-13 movie ratings,” with the company saying posts featuring strong language, risky stunts, and content that could encourage harmful behavior would be filtered. Torrez noted that the Motion Picture Association, which has overseen the film rating system for decades, had called Meta’s use of the PG-13 label “literally false, deceptive, and highly misleading.” He said Meta’s approach depends on algorithms that promote content to young users in ways that can expose them to predators, and he demanded that Meta implement age verification and address harmful algorithms that proactively surface dangerous material.

Meta responded to The Post with a sharp rebuke, saying it strongly disagrees with the allegations and highlighting the substantive protections it says have already been built into its tools. “The only promotional stunt is this letter, which is littered with factual errors and misrepresentations and deliberately designed to distract from the meaningful changes and built-in protections we’ve introduced to help keep young people safe online,” Meta spokesman Andy Stone said. The company has pointed to ongoing safety features and policies aimed at limiting teens’ exposure to mature content, while critics have pointed to independent findings that question the effectiveness of those tools.

The New Mexico attorney general’s office filed the underlying civil lawsuit in state court in late 2023, and the case heads to trial on Feb. 2. Zuckerberg is named as a defendant alongside Meta in the suit, which alleges that the company repeatedly misled the public about the effectiveness of its safety tools and failed to protect children from sexual content and online predators on its platforms. The court record details a test set up by investigators in which four fictional children, created with AI-generated imagery that depicted ages 14 or younger, were used to monitor the flow of adult material and predator outreach on Instagram and Facebook. Prosecutors contend the test demonstrated how Meta’s recommendation algorithms could connect predators with minors at scale and enable harm.

The broader legal and regulatory backdrop includes scrutiny from other states and watchdog groups over Meta’s handling of teen safety tools. In September, Fairplay for Kids, a watchdog group focused on child safety online, published findings indicating that only about one in five safety features associated with Meta’s teen-accounts program performed effectively in real-world tests. The New Mexico case, filed in late 2023, argues that Meta and Zuckerberg misled the public about the real-world effectiveness of the company’s safety measures and calls for concrete protections, including independent oversight and stronger safeguards against unsafe content.

As the dispute unfolds, the case is likely to shape how states approach social-media safety labeling and the deployment of teen-protection tools on major platforms. The parties are expected to appear in court ahead of the February trial date, and the outcome could influence how tech companies market safety features and how aggressively regulators pursue enforcement actions over online environments for minors.